In artificial intelligence (AI), guardrails are the boundaries and ethical protocols that keep AI systems operating within safe, ethical, and intended limits. They matter especially in education, where they help ensure that AI-powered content and tools are accurate, appropriate, relevant, and enriching for learners.

Just as guardrails along a winding road keep travelers from veering off course, guardrails in AI-enabled education keep the journey through learning and technology secure and aligned with our educational goals and values. In education, the need for robust guardrails extends beyond safety and ethics to information accuracy, alignment with educational standards, and the mitigation of bias and hallucinations, cases where AI-generated content reflects skewed assumptions or states things that are not factual.

The Imperative for AI Guardrails in Education: Misinformation and Standards Alignment

Education is particularly vulnerable to misinformation, and it depends on close alignment with specific educational standards. For example, without proper context, an AI might automatically align content with the Common Core State Standards (CCSS), disregarding an educator’s need for content that follows different standards, such as Florida’s B.E.S.T. Standards. The result can be content that is technically accurate yet irrelevant to the students’ curriculum. Guardrails therefore need to make AI applications context-aware and adaptable to different educational frameworks.
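To make the standards example concrete, here is a minimal sketch of what such a guardrail could look like in code. It is an illustration only, not a description of any particular product: the names `LessonRequest`, `build_prompt`, and `SUPPORTED_STANDARDS` are hypothetical, and the registry of frameworks is an assumption. The idea is simply that the system refuses to guess a standards framework and requires the educator to choose one.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical registry of supported frameworks (illustrative, not exhaustive).
SUPPORTED_STANDARDS = {
    "CCSS": "Common Core State Standards",
    "FL-BEST": "Florida B.E.S.T. Standards",
}


@dataclass(frozen=True)
class LessonRequest:
    """An educator's request for AI-generated lesson content."""
    topic: str
    grade: str
    standards: Optional[str] = None  # framework key chosen by the educator


def build_prompt(request: LessonRequest) -> str:
    """Build a generation prompt without ever defaulting the framework."""
    # Guardrail 1: no silent default such as Common Core.
    if request.standards is None:
        raise ValueError("A standards framework must be chosen explicitly.")
    # Guardrail 2: only frameworks the system actually knows about.
    if request.standards not in SUPPORTED_STANDARDS:
        raise ValueError(f"Unsupported standards framework: {request.standards}")
    framework = SUPPORTED_STANDARDS[request.standards]
    return (
        f"Write a grade {request.grade} lesson on {request.topic}. "
        f"Align every objective to the {framework} only."
    )
```

Calling `build_prompt(LessonRequest("fractions", "4", "FL-BEST"))` produces a prompt that names the Florida framework, while omitting the framework raises an error instead of quietly falling back to another standard.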

Confronting Bias: The Quest for Equitable AI

Bias and AI hallucinations make comprehensive guardrails even more urgent. Bias arises when a model reproduces skewed patterns in its training data; hallucination occurs when it fabricates information that has no factual basis. When an AI is asked to create an image of a teacher or a superintendent without specific guidelines, it might default to stereotypical representations: a white woman as a teacher, a white man as a superintendent.

These biases, embedded in the vast datasets that AI learns from, reflect neither the rich diversity of our global society nor the aspirations of inclusivity and equity in education. If AI is to contribute positively to educational environments, it needs guardrails that reduce such biases and curb hallucinated content that could mislead or misinform.

Crafting AI Guardrails for Educational Excellence

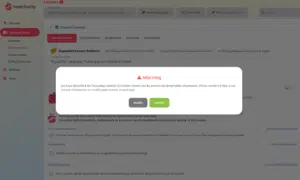

Recognizing these considerations, Teachally builds stringent guardrails into its platform to support the responsible use of AI in educational settings. In doing so, Teachally addresses the potential pitfalls of misinformation, bias, and hallucinations, and works to ensure that its AI-driven tools and content are safe, inclusive, and closely aligned with the needs of educators and students.

This commitment to a platform built on ethical AI practices reflects Teachally’s vision of a future in which technology and education work together to create learning experiences that are informative, engaging, and true to the values we cherish.